In 2009, an Israeli drone flying over the Gaza Strip transmitted back to its command station an image of a telltale rocket trail streaking toward Israeli territory. Many kilometers away, a young Israeli operator, Capt. Y, quickly maneuvered the unmanned aircraft to get a look at the young Palestinian who had just launched the deadly missile. Y’s drone squadron already had authorization to take him out. In an instant, a rocket struck the hidden launch site, followed by a flash of fire.

When the smoke cleared, Y saw images of the shooter lying flat on the ground. Twenty seconds passed. And then Y saw something even more remarkable — the dead man began to move.

Severely wounded, the Palestinian began to claw his way toward the road. Y could clearly see the man’s face, and in his youth and determination Y must have recognized something of himself. So, now Y and his team had a decision to make: Would they let the wounded terrorist escape, or circle the drone back and finish him off?

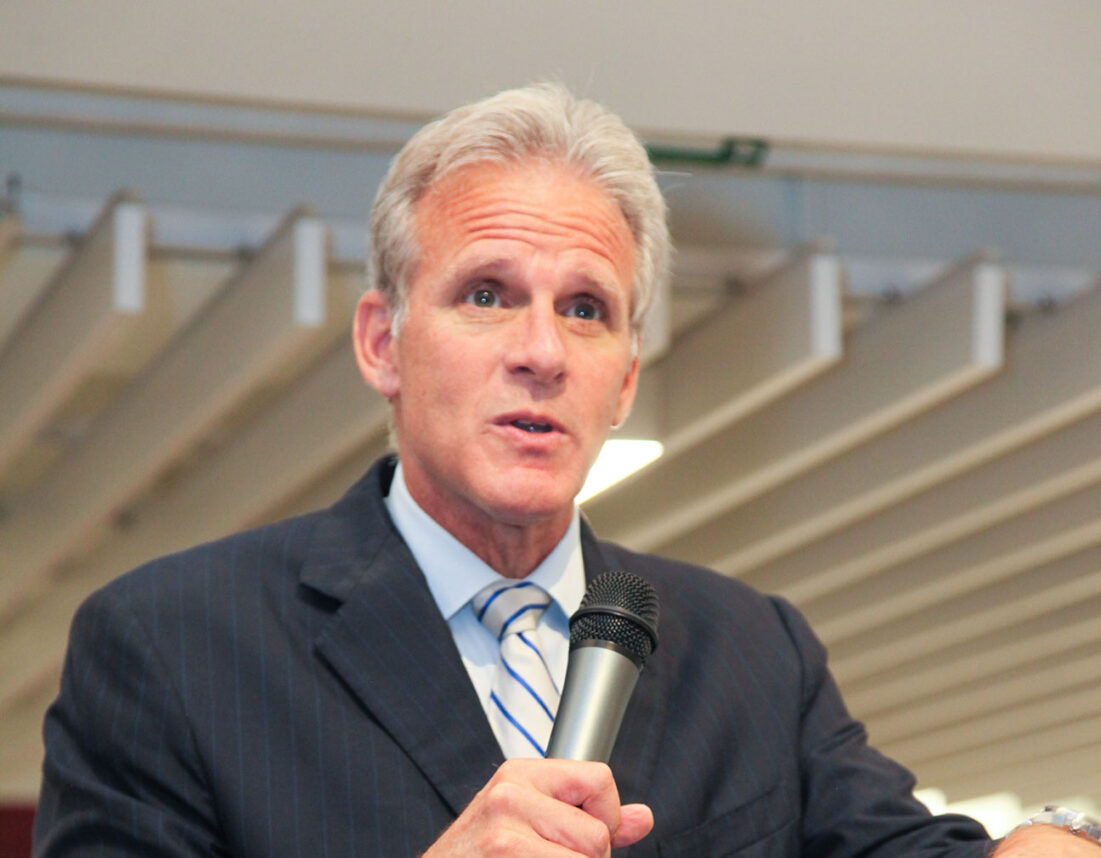

Y told me this story in the lobby of the Beverly Hilton Hotel in Beverly Hills. He is 23, wiry and intense. When I arrived for our interview, arranged through the Friends of the Israel Defense Forces, Y was sitting in a small atrium, getting in a last smoke.

For security reasons, I cannot use his real name, so I agreed to refer to the captain as Y, and to his fellow drone operator, a lieutenant, as M.

M is calmer. She is 25, has large blue eyes and wears her blond hair pulled back into a ponytail — Scarlett Johansson’s tougher twin sister.

Unmanned aerial vehicles, or UAVs, as drones are otherwise known, have been in use militarily since World War I. In 1917, the Americans designed the Kettering “Bug” with a preset gyroscope to guide it into enemy trenches. In World War II, the Nazis deployed “the Fritz,” a 2,300-pound bomb with four small wings and a tail motor. But it is only in the past few years that UAVs have made almost-daily headlines. These days, the United States, in particular, has widely employed UAVs in the far reaches of Pakistan and Afghanistan in its fight against terrorists. As recently as Nov. 1, a U.S. drone strike killed Pakistani Taliban leader Hakimullah Mehsud, demonstrating once again the deadly effectiveness of, and the growing reliance upon, these weapons of war.

But like all revolutionary new weapons, this success comes at a price, and it’s a price we in America prefer not to check. Just a day before I met with the two Israelis in late October, two influential human rights groups released reports asserting that the number of civilian deaths resulting from America’s largely secret “drone wars” was far greater than the government had claimed. Human Rights Watch reported that since 2009, America’s anti-terrorist drone strikes in Yemen had killed at least 57 civilians — more than two-thirds of all casualties resulting from the strikes — including a pregnant woman and three children. In Pakistan, Amnesty International found that more than 30 civilians had died from U.S. drone strikes between May 2012 and July 2013 in the territory of North Waziristan.

To Americans, news of anonymous civilians dying in faraway places may not resonate deeply, even if we are the ones who killed them. But these two humanitarian groups’ reports point to the rapid increase in the United States’ use of unmanned aerial vehicles as weapons of war, and they underline the lack of clear international ethical codes to guide that use.

Who gets to use drones? How do commanders decide whom to target, whom to spy on? If a drone operator sitting in a command room in Tampa, Fla., can kill a combatant in Swat, in northern Pakistan, does that make downtown Tampa a legitimate military target, as well?

I wanted to learn more about the morality of this advancing technology, so I talked to people who have studied drones, who have thought about their ethical implications, and who, like Y and M, actually use them. I hoped that through them I might come to understand how we, as a society, should think about the right way to use these remarkable, fearsome tools.

I wanted to know if there exists, in essence, a Torah of drones.

From 12,000 feet up, the Heron drone Capt. Y was piloting that day during Operation Pillar of Defense offered a perfect view of the wounded Palestinian.

“You see everything,” Y told me. “You could see him lying on the ground, moving and crawling. Even if you know he’s the enemy, it’s very hard to see that. You see a human being who is helpless. You have to bear in mind, ‘He’s trying to kill me.’ But, in my mind, I hoped somebody would go help him.”

Y’s father is French, and his mother is Israeli. He lives in Beersheba, where his wife is a medical student. Y’s brother was killed in the Second Lebanon War by a Hezbollah rocket while he was piloting a Yasur combat helicopter. Y was 18 at the time.

“I believe some of the way to mourn is to go through the same experience of the man you loved,” Y said.

Lt. M’s parents both are French immigrants to Israel, staunch Zionists, and, she said, she always knew one day she’d be an Israel Defense Forces (IDF) officer.

In Israel, those who cannot complete pilot-training very often enter the drone corps. It may not hold the cachet of becoming an Air Force pilot, but both of these soldiers believe drones are the future.

“I like the idea that every flight you do, you’re helping your fellow citizens,” M said.

“We feel we contribute more than other people,” Y said. “But today, in the modern day, you don’t have to take risks. If you risk your life, it doesn’t mean you contribute more.”

In the United States and Israel, where the reluctance to put boots on the ground is at a high point, the fact that drones offer significant military capabilities with far less risk accounts precisely for the tremendous increase in their use.

Israel, in fact, has led the way. Its effective use of drones during the 1982 Lebanon War rekindled American interest in UAVs. During America’s first Gulf War, in 1991, the U.S. Navy bought a secondhand Pioneer drone from Israel and used it to better aim heavy artillery. At one point during that war, a squad of Iraqi soldiers saw a drone overhead and, expecting to be bombarded, waved a white sheet. It was the first time in history soldiers had surrendered to a drone.

Today, the United States increasingly uses drones both for civilian intelligence operations, as in Yemen and Pakistan, and for military purposes. Currently, some 8,000 UAVs are in use by the U.S. military. In the next decade, U.S. defense spending on drones is expected to reach $40 billion, increasing inventory by 35 percent. Since 2002, 400 drone strikes have been conducted by U.S. civilian intelligence agencies.

At least 87 other countries also have drones. Earlier this year, Israel announced it was decommissioning two of its combat helicopter squadrons — to replace them with drones.

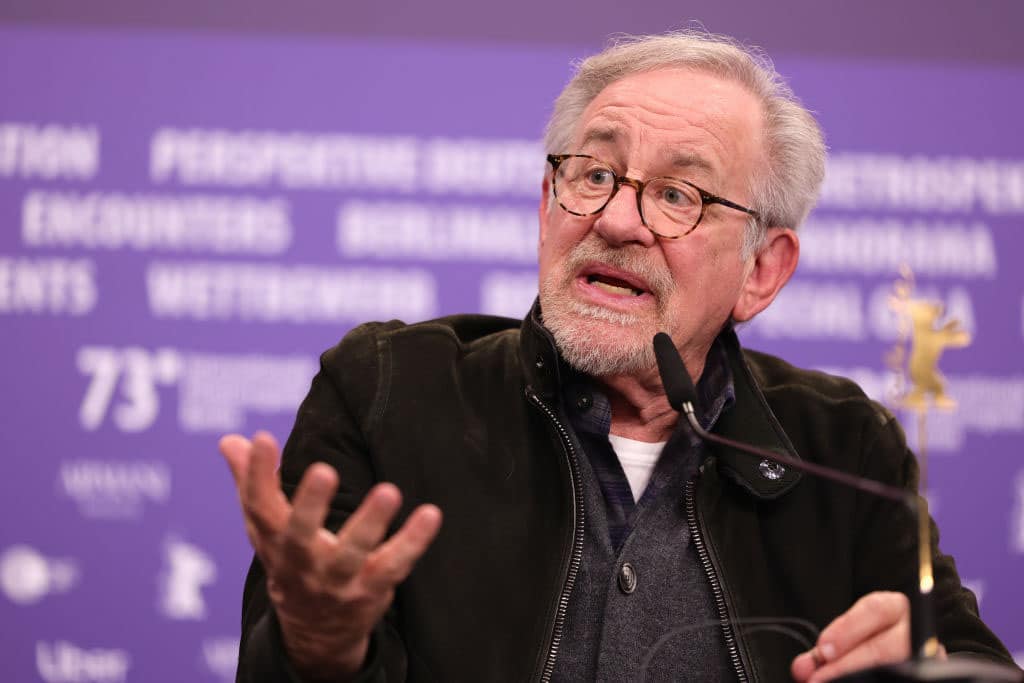

“We’re at the very start of this technological revolution,” Peter Singer, author of “Wired for War: The Robotics Revolution and Conflict in the 21st Century,” told me by phone. “We’re in the World War I period of robotics. The cat’s out of the bag. You’re not going to roll it back. But you do want to set norms.”

Singer’s book, first published in 2009 when the public debate over drone ethics was nonexistent, is still the best road map to a future we all have reason to fear, but must face, in any case.

I called Singer to see where he stands on the ethical issues raised by civilian drone deaths.

Actually, he pointed out, his book dealt largely with military use of these technologies. Even he wouldn’t have predicted such widespread use of drones by surveillance agencies that are unversed in the rules of war and that operate without the safeguards built into military actions.

That, for Singer and others who parse the ethics of drones, is the rub. In the military, there are rules of engagement. There is the risk of court-martial. Strategic training is better in the military than in intelligence agencies.

“One group goes to war college,” Singer said, “the other doesn’t. And it’s very different when you’re a political appointee, rather than a military officer. Some tactics would not be allowed in a military operation.”

I asked Singer for an example. He chose one from the CIA operations just now under scrutiny by human rights groups.

“Double-tapping,” he said. “That would never make its way past a military officer.”

Double-tapping is when an aircraft, manned or not, circles back over a targeted site and strikes a second time, either to finish off the wounded or to take out forces that have rushed in to help. Exactly the ethical question Capt. Y faced.

When Y saw that he hadn’t killed the Palestinian the first time, he and his team faced one of the most difficult, urgent questions of drone combat: Should they double-tap?

Ethical issues in drone combat come up all the time, M said — in training, in operations and, afterward, in frequent debriefing and analysis.

“I have so many examples of that, I can’t count,” Y told me.

A landmark Israeli Supreme Court decision on targeted killing provides the ethical framework for IDF drone operators.

In 2006, the court found there is nothing inherently wrong with a targeted killing, whether by an F-16, Apache helicopter or unmanned drone.

But, the court added, in order for the action to be acceptable, the soldiers must satisfy three questions:

The first is, what is a legitimate target? The target, the court said, must be an operational combatant seeking to do you harm — not a retired terrorist or someone you want to punish for past sins.

Second, has the target met the threshold level of intelligence? The drone team must have a deep knowledge that its target meets the first condition, verified by more than one source.

Finally, who is the supervising body? There must be independent oversight outside the hands of the drone operators and the IDF.

To professor Moshe Halbertal, these three conditions form the basis for the moral exercise of deadly drone force.

Halbertal is a professor of Jewish philosophy at Hebrew University, the Gruss Professor of Law at the New York University School of Law and one of the drafters of the IDF’s code of ethics.

Shortly before Halbertal came to Los Angeles to serve as scholar-in-residence Nov. 1-3 at Sinai Temple, I spoke with him about Israel’s experience with drones. From what he could tell, he said, Israel has a more developed ethical framework.

In the American attacks, Halbertal said, “The level of collateral damage is alarming.”

In Israel, he said, “There is a genuine attempt to reduce collateral killing. If this were the level of collateral damage the IDF produces, it would be very bad.”

The fact that drones are less risky is not what makes their use more prone to excesses, Halbertal said.

“Because military operations involve more risk, there is more care in applying them,” Halbertal said. “But, on the other hand, soldiers make mistakes out of fear in the heat of combat that drone operators don’t.”

The danger with drones, he said, is that because the political risks of deploying them, versus deploying live troops, are much less, they can be used more wantonly.

I asked Capt. Y if he’d had experience with collateral damage.

“It’s happened to me,” he said. “We had a target and asked [intelligence officers] if there were civilians in the area. We received a negative. Later, we heard in the Palestinian press that there were casualties. We checked, and it was true — a father and his 17-year-old son. What can we do? I didn’t have a particular emotion about it.”

The people who know the people getting killed do have emotions about it. And that grief and anger can work to undo whatever benefits drone kills confer.

“I say every drone attack kills one terrorist and creates two,” Adnan Rashid, a Pakistani journalist, told me. In the Swat Valley, where he lives, the fear of American drones and the innocent lives they’ve taken has been one of the extremists’ best recruiting tools, Rashid said.

If that’s the case, better oversight and clearer rules for drones may be not just the right thing to do but in our self-interest as well.

No war is ever clean. But that doesn’t mean drone use should increase without the implementation of the kind of national, and international, norms Singer now finds lacking.

If the United States doesn’t adopt the kinds of oversight Israel already has in place, at the very least, Singer believes, we should move the drone program from the intelligence agencies to the military.

It’s a call that has increasingly vocal support from America to Pakistan. Sen. John McCain (R-Ariz.), a member of the Senate Foreign Relations Committee as well as the Senate Armed Services Committee, argued that Congress could exercise better oversight of a drone program operated by the military.

“Since when is the intelligence agency supposed to be an Air Force of drones that goes around killing people?” McCain said recently on Fox News. “I believe that it’s a job for the Department of Defense.”

“The killing is creating more anger and resulting in the recruitment of more people to pursue revenge,” former Pakistani Minister of State Shahzad Waseem told me. “The minimum you can do is to come up openly with some kind of treaty or set of rules to give it a legal shape, mutually accepted by all sides.”

Will Americans rise up to make a stink over this? That may be a tall order for a populace that seems to take each revelation of intelligence community overreach — from drone deaths to National Security Agency spying — with a collective yawn. Will the international community begin to create a framework that at least sets standards for drone use and misuse?

Unfortunately, humans, particularly in developing technology, have a way of advancing faster on the battlefront than on the legal or moral fronts. It took the Holocaust, Singer pointed out, for humanity to come up with the Geneva Conventions of 1949. What fresh hell must befall us before we at least attempt to codify behavior for the Age of Drones?

And even if we set standards and nations abide by them, it seems inevitable that the very nature of drones one day will allow non-state actors — the likes of al-Qaeda — to follow the lead of Hezbollah in using them, as well.

If, in the 1940s and ’50s, the best and the brightest scientific minds went into nuclear physics — and gave us the atomic bomb — these days, those talents are all going toward artificial intelligence. At the high end, a future filled with autonomous, intelligent killing drones awaits us.

At the low end, consider this: Singer also serves as a consultant for the video game “Call of Duty,” for which he was asked to envision a homemade drone of the not-too-distant future. He and others came up with a Sharper Image toy helicopter, controlled by an iPad and mounted with an Uzi. A promotional team actually made a fully functional version of this weapon for a YouTube video, and 17 million hits later, the Defense Department telephoned, perturbed.

“Unlike battleships or atomic bombs,” Singer told me, “the barriers to entry for drones are really low.”

That doesn’t mean we should give up on establishing ethical norms for nations — or people — but we do need to keep our expectations in check.

We may be heading toward a world of what Halbertal describes, in the Israeli context, as “micro wars,” where each human is empowered with military-like capacity and must make his or her own ethical choices on the spot.

Capt. Y made his own moral choice that day during Operation Pillar of Defense. He watched as the wounded Palestinian man managed to get to the road, where a group of civilians came to his aid.

Why didn’t Y “double-tap”?

“He was no longer a threat,” Y told me, matter-of-factly. “And several people gathered around him who weren’t part of the attack.” That was that: The rules of engagement were clear.

In a micro-war, a soldier in combat — not just generals at a central command — must determine in the heat of battle who is a terrorist and who is a civilian, who shall live and who shall die.

In his book, Singer envisions a future in which artificial intelligence will also enable us to provide ethical decision-making to the machines we create. It would be our job to program Torah into these machines — and then let them do with it as they will.

Much like Someone has done with us.
